What Cyber Leaders Told Us About Managing AI Risk in 2026

We are at an inflection point in cybersecurity, one that is unfolding faster and across more dimensions than anything we’ve experienced before. AI is no longer a theoretical capability or a future‑state aspiration. It’s rapidly reshaping the global economy and, with it, the security and risk landscape. Generative and agentic AI capabilities are being deployed inside enterprises faster than security teams can establish control frameworks, ownership models, or risk boundaries. This challenge is compounded by a noisy marketplace in which AI‑themed vendors are flooding the ecosystem, fueled by aggressive investment and hype‑driven positioning. Cyber practitioners are inundated with claims of “AI‑powered” differentiation and cannot reasonably separate innovation from noise at this velocity.

So how are CISOs and their teams managing AI risk?

Research with members of CRA’s CyberRisk Collaborative – whose mission is to strengthen the cybersecurity workforce through professional and leadership development – surfaced the following priorities:

Security Operations

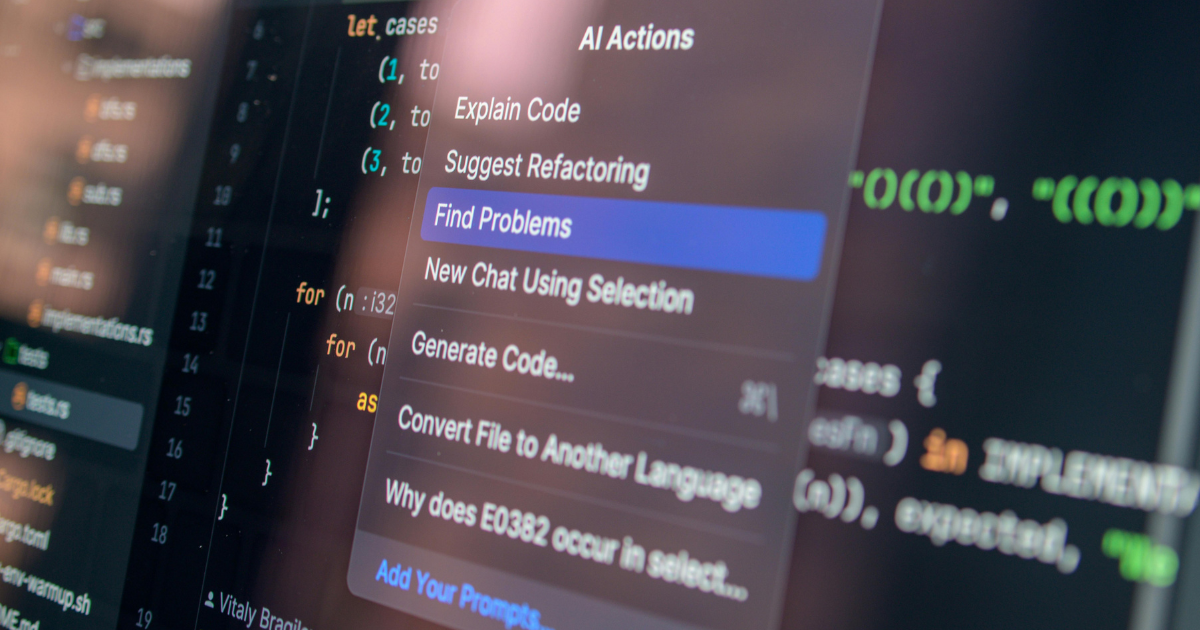

AI is reshaping how security operations function, shifting from manual, reactive workflows to augmented and increasingly automated models. While human judgment remains central, members tell us that AI is accelerating and restructuring key phases of the SOC lifecycle:

- Behavioral and model-driven detection augment signature-based approaches, identifying subtle patterns and correlated signals that evade traditional rules

- Automated enrichment and triage reduce alert fatigue, standardize investigations, and free analysts to focus on high-impact incidents

- Contextual reasoning and natural-language summarization compress investigation time and improve reporting clarity

- Constrained, agentic workflows execute pre-approved containment and remediation actions at machine speed, with governance guardrails and human oversight

The net effect is not the elimination of security analysts but the elevation of their role: from manual triage to decision authority, detection engineering, and resilience strategy.

Shadow AI

Alongside formal AI initiatives, leaders are confronting a parallel and less controlled development: Shadow AI. Generative tools, copilots, embedded APIs, and autonomous agents are being adopted across business units without formal approval or governance. In many organizations, usage is outpacing both visibility and policy frameworks.

Members describe several consistent risk patterns emerging:

- Sensitive or proprietary data being entered into public models without clear retention or usage controls

- AI-generated outputs influencing decisions without validation, traceability, or accountability

- Unsanctioned integrations introducing new API keys, connectors, and attack surface

- Compliance exposure where data handling, privacy, or regulatory obligations are not clearly addressed

Unlike traditional shadow IT, Shadow AI operates inside everyday workflows—often invisible to centralized security teams. The issue is not intent, but unmanaged acceleration. Leaders emphasize that the priority is not prohibition, but bringing AI usage into a governed, visible framework before risk scales beyond control.
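
One way to start building that visibility is to compare observed outbound traffic against a list of approved AI services. The sketch below is illustrative only: the domain names, the `(user, domain)` record format, and the function name `unsanctioned_ai_usage` are assumptions, not drawn from any member's tooling. A real deployment would likely read from proxy or DNS logs and a maintained catalog of AI services.

```python
from collections import Counter

# Hypothetical allow-list of sanctioned AI services (assumption for illustration).
APPROVED_AI_DOMAINS = {"copilot.corp.example.com"}

# Hypothetical catalog of domains known to host AI services, approved or not.
KNOWN_AI_DOMAINS = APPROVED_AI_DOMAINS | {
    "chat.example-ai.com",
    "api.example-llm.net",
}


def unsanctioned_ai_usage(records):
    """Count requests per (user, domain) to known AI services that are not approved.

    `records` is an iterable of (user, domain) pairs, e.g. parsed from proxy logs.
    """
    counts = Counter()
    for user, domain in records:
        # Normalize case, whitespace, and a trailing dot before comparing.
        normalized = domain.strip().lower().rstrip(".")
        if normalized in KNOWN_AI_DOMAINS and normalized not in APPROVED_AI_DOMAINS:
            counts[(user, normalized)] += 1
    return counts


if __name__ == "__main__":
    sample = [
        ("ana", "chat.example-ai.com"),
        ("raj", "copilot.corp.example.com"),  # approved, so not reported
        ("lee", "api.example-llm.net"),
    ]
    for (user, domain), hits in unsanctioned_ai_usage(sample).most_common():
        print(f"{user} -> {domain}: {hits} request(s)")
```

A report like this is a starting point for a conversation with the business unit, consistent with the emphasis on governance rather than prohibition.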

Agentic AI

Much as generative AI consumed tech and cyber conversations in 2025, agentic AI will dominate conversations this year. These systems create content, make recommendations, summarize sensitive data, and, in some cases, take autonomous actions. This raises new questions about reliability, bias, data leakage, model abuse, and unintended behavior.

Addressing these risks requires a modern governance framework built on:

- Human approval for high‑impact or irreversible system actions

- Policy‑embedded guardrails that constrain automated workflows

- Clear lines of ownership for AI‑influenced decisions or outcomes

- Auditability of AI-generated insights, recommendations, and actions
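
The four controls above can be sketched as a single gate that every agent-proposed action passes through. This is a minimal illustration under stated assumptions, not a reference implementation: the action names, owner labels, `POLICY` table, and `ActionGate` class are all hypothetical, and the gate only records a decision rather than executing anything.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional

# Hypothetical policy table: action -> (accountable owner, requires human approval).
POLICY = {
    "enrich_alert": ("soc-tier1", False),    # low impact, may run automatically
    "isolate_host": ("soc-lead", True),      # high impact, needs a human
    "disable_account": ("iam-team", True),   # high impact, needs a human
}


@dataclass
class AuditEntry:
    timestamp: str
    action: str
    target: str
    owner: str
    decision: str
    approver: Optional[str]


@dataclass
class ActionGate:
    audit_log: List[AuditEntry] = field(default_factory=list)

    def request(self, action: str, target: str, approver: Optional[str] = None) -> str:
        """Return 'allowed', 'needs_approval', or 'denied', and log the decision."""
        if action not in POLICY:
            # Guardrail: anything outside the policy table is refused.
            owner, decision = "unassigned", "denied"
        else:
            owner, needs_approval = POLICY[action]
            if needs_approval and approver is None:
                decision = "needs_approval"
            else:
                decision = "allowed"
        # Auditability: every request is recorded, whatever the outcome.
        self.audit_log.append(
            AuditEntry(
                timestamp=datetime.now(timezone.utc).isoformat(),
                action=action,
                target=target,
                owner=owner,
                decision=decision,
                approver=approver,
            )
        )
        return decision


if __name__ == "__main__":
    gate = ActionGate()
    print(gate.request("enrich_alert", "alert-1"))
    print(gate.request("isolate_host", "host-7"))
    print(gate.request("isolate_host", "host-7", approver="alice"))
    print(gate.request("wipe_disk", "host-7"))
```

Keeping the policy in one table makes the autonomy boundary explicit and reviewable, and the audit log ties each decision to a named owner and, where required, a human approver.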

Managing agentic AI risk is essential for business resilience and, more broadly, for national security. Once again, our members emphasized that agentic AI increases the need for human oversight rather than reducing it.

The Path Forward: Community, Process, Technology

Given the scale and speed of change, organizations need a structured, disciplined approach to integrating AI risk management into their security strategies:

- Strengthen the foundation: AI performance is directly tied to data quality, architectural coherence, and governance maturity. Organizations must close visibility gaps and normalize telemetry before expanding AI-driven automation.

- Bring AI usage into the light: Shadow AI cannot be eliminated, but it can be governed. Governance starts with communicating with and educating every employee who has access to your network and data. Approved tools, clear policy, monitoring, and employee guidance are also essential to mitigating exposure.

- Define autonomy boundaries: As agentic systems expand, organizations must clearly define what actions can be automated, what requires human approval, and who is accountable for outcomes.

- Align at the executive level: AI risk is now a board conversation. Security leaders must articulate exposure, control posture, and tradeoffs in business terms—not technical ones.

But above all, navigating the path ahead requires us to acknowledge a simple truth: everyone is figuring this out at the same time.

Unlike more established cybersecurity domains—where decades of standards, regulatory frameworks, and maturity models exist—AI security is still in its infancy. NIST’s AI Risk Management Framework is an important starting point, but the depth and breadth of prescriptive AI standards simply do not exist yet.

This makes peer learning and shared lessons an essential part of any credible roadmap. Across our community, we consistently hear that some of the most actionable insights come not from theoretical models but from what fellow CISOs have already tried, broken, corrected, or proven effective.

The path forward is clear: in a space with no settled playbook, managing AI risk starts with community.
